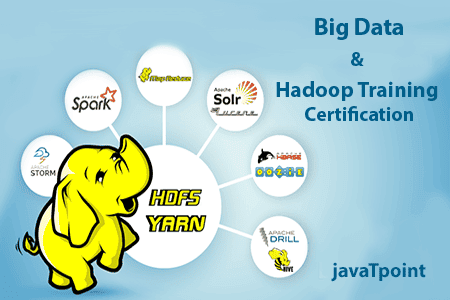

Apache Hadoop is an open-source software framework that supports data-intensive distributed applications. Its processing model is MapReduce, in which an application is divided into many small fragments of work, each of which may be executed on any node of a Hadoop cluster. The MapReduce framework assigns this work to the nodes in the cluster. In the Hadoop environment, we can work with petabytes of data by using HDFS (Hadoop Distributed File System), the scalable and portable file system of the Hadoop framework.
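As a rough illustration of dividing an application into small fragments of work spread across cluster nodes, the sketch below splits a list of records into fixed-size fragments and hands them to nodes in turn. The node names, the fragment size and the round-robin policy are all assumptions for illustration; Hadoop's real scheduling (YARN) also weighs data locality and available resources, which this sketch ignores.

```python
from typing import Dict, List


def split_into_fragments(records: List[str], fragment_size: int) -> List[List[str]]:
    """Divide the input into consecutive fragments of at most fragment_size records."""
    if fragment_size <= 0:
        raise ValueError("fragment_size must be positive")
    return [records[i:i + fragment_size] for i in range(0, len(records), fragment_size)]


def assign_round_robin(fragments: List[List[str]], nodes: List[str]) -> Dict[str, List[List[str]]]:
    """Assign fragments to nodes in turn (a simplification of real cluster scheduling)."""
    if not nodes:
        raise ValueError("need at least one node")
    plan: Dict[str, List[List[str]]] = {node: [] for node in nodes}
    for index, fragment in enumerate(fragments):
        plan[nodes[index % len(nodes)]].append(fragment)
    return plan


if __name__ == "__main__":
    records = [f"record-{n}" for n in range(10)]
    fragments = split_into_fragments(records, fragment_size=3)
    # Hypothetical node names; a real cluster would report its own hosts.
    plan = assign_round_robin(fragments, ["node-a", "node-b", "node-c"])
    for node, work in plan.items():
        print(node, [len(f) for f in work])
```

With ten records and a fragment size of three, this prints `node-a [3, 1]`, `node-b [3]` and `node-c [3]`: four fragments shared across three nodes, each of which could be processed independently.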

Hadoop provides a way to store very large amounts of data, on the order of petabytes. This storage system is called the Hadoop Distributed File System (HDFS). Hadoop brings a new way to store and analyze data: because it scales linearly on low-cost commodity hardware, it removes the limits of storage and compute from the data analytics equation.
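To make the storage side concrete, HDFS stores each file as a sequence of blocks and keeps several replicas of every block. The sketch below estimates the block count and raw disk usage of a file, then how many nodes would be needed if capacity grows linearly with node count. It assumes the default block size of 128 MB and replication factor of 3 found in recent Hadoop releases (configurable through `dfs.blocksize` and `dfs.replication`); the 40 TB of usable disk per node is a hypothetical figure, and the estimate ignores compression, temporary space and NameNode metadata.

```python
from typing import Tuple

MB = 1024 ** 2
TB = 1024 ** 4
PB = 1024 ** 5

# Default HDFS settings in recent Hadoop releases; clusters often override them
# via dfs.blocksize and dfs.replication.
DEFAULT_BLOCK_SIZE = 128 * MB
DEFAULT_REPLICATION = 3


def hdfs_footprint(file_bytes: int,
                   block_size: int = DEFAULT_BLOCK_SIZE,
                   replication: int = DEFAULT_REPLICATION) -> Tuple[int, int]:
    """Return (number of blocks, raw bytes stored across all replicas)."""
    # Integer ceiling division: a final partial block still counts as one block.
    blocks = (file_bytes + block_size - 1) // block_size
    # A partial block only uses the bytes it holds, so raw usage is simply
    # the file size multiplied by the number of replicas.
    raw_bytes = file_bytes * replication
    return blocks, raw_bytes


def nodes_needed(raw_bytes: int, usable_bytes_per_node: int) -> int:
    """Estimate node count, assuming capacity grows linearly with node count."""
    return (raw_bytes + usable_bytes_per_node - 1) // usable_bytes_per_node


if __name__ == "__main__":
    blocks, raw = hdfs_footprint(1 * PB)
    # 40 TB of usable disk per node is a hypothetical figure.
    print(blocks, raw // TB, nodes_needed(raw, 40 * TB))
```

For a 1 PB dataset this prints `8388608 3072 77`: about 8.4 million blocks, 3,072 TB of raw storage after replication, and 77 nodes under the assumed per-node capacity. Doubling the data roughly doubles the node count, which is what "linear scalability" means in practice.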

Hadoop uses MapReduce, which follows a "divide and conquer" approach. The data is organized as key-value pairs. In the map phase, the data, which is spread across many machines, is processed in parallel chunks, with each node working on its own portion. The framework then sorts and groups the intermediate results by key, and the reduce phase processes the collected data.
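The map, sort and reduce steps described above can be illustrated with word count, a common introductory MapReduce job. This is a minimal single-process sketch of the data flow, not Hadoop's Java API: each inner list stands in for the chunk of data held on one node, and the mappers run sequentially here rather than in parallel across a cluster.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit a (word, 1) key-value pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_and_sort(pairs: Iterable[Tuple[str, int]]) -> List[Tuple[str, List[int]]]:
    """Group values by key and sort by key, mimicking the framework's shuffle/sort step."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(chunks: List[List[str]]) -> Dict[str, int]:
    # Each chunk stands in for the data on one node; on a real cluster the
    # mappers for different chunks would run in parallel.
    mapped = chain.from_iterable(map_phase(line) for chunk in chunks for line in chunk)
    return dict(reduce_phase(k, v) for k, v in shuffle_and_sort(mapped))


if __name__ == "__main__":
    chunks = [["big data needs big storage"], ["hadoop stores big data"]]
    print(word_count(chunks))
    # → {'big': 3, 'data': 2, 'hadoop': 1, 'needs': 1, 'storage': 1, 'stores': 1}
```

Because the mapper only looks at one line at a time and the reducer only looks at one key at a time, both steps can be spread across many machines; only the shuffle/sort step needs to move data between them.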

Our advanced professional certification programs and high-quality mock courses serve as a gateway for learners interested in starting their careers in the global IT sector.

Robust Support

From Top-Level Industry

Best Faculty

Ample Experience

Live Seminars

Reliable Certification

No. 1 Trainers

Unrelenting Dedication

High-Tech Lab

Placement Assistance

Get a strong foundation in Big Data concepts

Analyze, store, and manage unstructured data

Plan and implement a Big Data strategy for your organization